Arboreal Intuitions, 2023. Exhibited at the Art Gallery of Southwestern Manitoba, 2023. © Erika Jean Lincoln 2025

In 2020 I began a research project on artificial intelligence (AI), machine learning (ML), and neurodivergent learning. Starting with the question “What is intelligence and where does it lie?”, I addressed the dominant narratives surrounding how learning and intelligence are understood within technoscientific cultures.

This research resulted in an article titled “Crip-Techno-Tinkerism: A Neurodivergent Learning Style Meets Machine Learning”, published in the journal Leonardo (2024), and an interactive installation titled Arboreal Intuitions (2023).

Through Arboreal Intuitions I offer an alternative model of intelligence and learning, where learning can occur through physical interaction and multi-sensory engagement and intelligence can be distributed between humans, machines, and environments.

During the pandemic I took long walks in my city and noticed large gaps in the urban forest of elm trees. I set out to photograph every stump and to learn everything I could about the trees. I began to dream of these trees becoming entities who could move and talk. These dreams became so sensorily vivid that I could recall them while awake. Through Arboreal Intuitions I materialize these dreams with light, sound, tactile objects, machine learning, robotic drawing, and human movement.

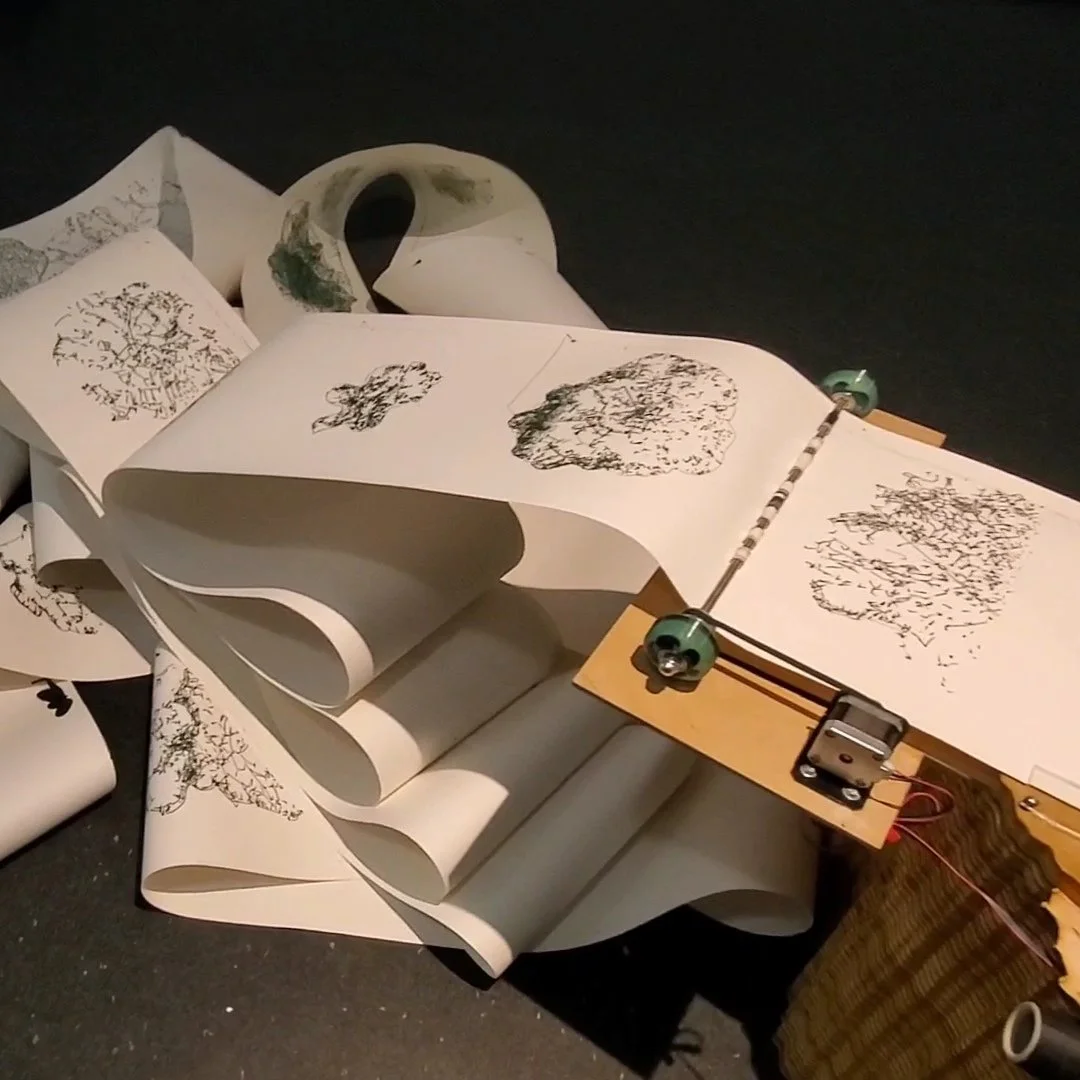

In the center of the gallery, a laptop running the control system sits on an aluminum stand. It is connected to an audio amplifier with custom speakers and to a robotic ink plotter, which sits close to the stand on a wooden sculpture of a tree stump. A roll of paper is attached to the back of the stand and fed through the back of the plotter; drawings of tree growth rings spill out the front of the plotter onto the floor.

Running at the same time, the control system generates a sound composition from a database of audio samples from field recordings made in the urban forest. The samples are mixed through a timing algorithm based on the seasonal life cycles of the numerous beings an elm tree hosts over its lifespan.
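One way to picture a season-based mix is as a weighted choice among groups of field recordings, where the weights shift with the time of year. The Python sketch below is only an illustration under stated assumptions and is not the installation's actual timing algorithm: the category names, the weights, and the functions `season_for_month` and `pick_category` are all hypothetical.

```python
import random

# Hypothetical weights: how strongly each group of field recordings
# is favoured in each season. None of these values come from the work.
SEASONAL_WEIGHTS = {
    "winter": {"wind": 3, "birds": 1, "insects": 0},
    "spring": {"wind": 1, "birds": 3, "insects": 1},
    "summer": {"wind": 1, "birds": 2, "insects": 3},
    "autumn": {"wind": 2, "birds": 2, "insects": 1},
}


def season_for_month(month: int) -> str:
    """Map a calendar month (1-12) to a northern-hemisphere season."""
    if month in (12, 1, 2):
        return "winter"
    if month in (3, 4, 5):
        return "spring"
    if month in (6, 7, 8):
        return "summer"
    return "autumn"


def pick_category(month: int, rng: random.Random) -> str:
    """Choose the next sample category, weighted by the current season."""
    weights = SEASONAL_WEIGHTS[season_for_month(month)]
    categories = list(weights)
    return rng.choices(categories, weights=[weights[c] for c in categories], k=1)[0]


# Example: draw the next category for a July mix.
next_category = pick_category(7, random.Random())
```

In this sketch a category with weight zero is never chosen in that season, so a sound simply falls silent for part of the cycle and returns later.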

The sounds are played through speakers made from paper and sawdust; the speakers are designed to be touched so that sound can be felt through the body as well as heard through the ears.

The combination of all these elements creates an intelligence ecosystem that materializes the relationship between sensory learning and computational processes. Here images, data, humans, and machines work together to observe, recall, and communicate knowledges within an environment.

A sensor attached to the wooden sculpture monitors the space near the plotter. When an audience member approaches the plotter, the sensor is triggered, the control system advances the paper, and the plotter begins a new drawing.

Stored in the control system is an image database of tree growth rings generated by an ML model. One image takes five to ten minutes to draw. When the drawing is complete, the plotter stops and the system waits for the next signal from the sensor.
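The cycle of sensing, advancing the paper, drawing, and waiting can be read as a small two-state machine. The following Python sketch is a minimal illustration and not the installation's control software. The class `Installation` and its method names are hypothetical, and two behaviours are assumptions that the description above does not specify: the drawing is picked at random from the database, and sensor triggers that arrive while a drawing is in progress are ignored.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Installation:
    drawings: list                 # hypothetical identifiers for stored ring images
    state: str = "waiting"         # either "waiting" or "drawing"
    log: list = field(default_factory=list)

    def on_sensor_trigger(self, rng=random):
        """Called when a visitor approaches the plotter."""
        if self.state != "waiting":
            return None            # assumption: ignore triggers mid-drawing
        self.log.append("advance_paper")
        drawing = rng.choice(self.drawings)   # assumption: random selection
        self.log.append(f"draw:{drawing}")
        self.state = "drawing"
        return drawing

    def on_drawing_complete(self):
        """Called when the plotter finishes (five to ten minutes later)."""
        if self.state == "drawing":
            self.log.append("stop_plotter")
            self.state = "waiting"


# Example: one visitor approaches, the drawing finishes, the system waits again.
installation = Installation(drawings=["rings_a", "rings_b"])
installation.on_sensor_trigger()
installation.on_drawing_complete()
```

Keeping the state explicit makes the waiting period visible: nothing happens until a person steps close enough to trigger the sensor.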

Projection video, 2:30, silent.